Hi, I'm tweek

Full-stack developer

AI product backends, data pipelines, and web interfaces built for production at scale.

I design and build AI‑driven platforms—from backend services and data pipelines to the interfaces that deliver them.

My work focuses on building and maintaining production systems that stay stable over time.

RAG & streaming chat built for scale

Talkie

End-to-end RAG & streaming chat platform engineered for reliable performance at scale.

- Step 1: GEN search

Pure LLM responses without retrieval; no document context is involved.

- Step 2: File upload & auto-indexing

Documents are uploaded, chunked, embedded, and stored in the vector index.

- Step 3: RAG search

The same query, now grounded with retrieved context and citations.
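
As a rough illustration of the chunk, embed, store, and retrieve flow in the steps above, here is a minimal Python sketch. It is not Talkie's implementation: `chunk_text`, `embed`, and `InMemoryIndex` are hypothetical names, the word-count "embedding" is a toy stand-in for a real embedding model, and the list-backed index stands in for a real vector store.

```python
import math
from collections import Counter


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryIndex:
    """Stores (doc_id, chunk_no, chunk, vector) rows in a plain list."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, int, str, Counter]] = []

    def add_document(self, doc_id: str, text: str,
                     size: int = 200, overlap: int = 50) -> None:
        for i, chunk in enumerate(chunk_text(text, size, overlap)):
            self.rows.append((doc_id, i, chunk, embed(chunk)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, int, str]]:
        """Return the top-k chunks; doc_id and chunk_no act as the citation."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda r: cosine(q, r[3]), reverse=True)
        return [(doc_id, i, chunk) for doc_id, i, chunk, _ in ranked[:k]]
```

Returning the document id and chunk number alongside each chunk is what lets a RAG answer cite where its context came from.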

Why I built this:

Many RAG examples look impressive but fall apart once real load, concurrency, or background indexing enter the picture.

Talkie was built to stay fast and reliable long after the demo ends.

Design focus:

Clean separation between Gateway, Workers, and Infra so chat, ingest, and retrieval can scale independently while staying observable.

What makes this hard:

Keeping ingest → index → retrieval → generation reliable under load, while streaming tokens in real time and staying vendor-agnostic at the LLM layer.
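
One common way to stay vendor-agnostic while streaming is to have the rest of the system depend on a small streaming interface rather than on a specific SDK. The Python sketch below shows that idea under assumptions: `LLMProvider`, `EchoProvider`, and `collect` are hypothetical names, and the fake provider simply echoes the prompt instead of calling any real vendor API.

```python
import asyncio
from typing import AsyncIterator, Protocol


class LLMProvider(Protocol):
    """Anything that can stream text pieces for a prompt."""

    def stream(self, prompt: str) -> AsyncIterator[str]: ...


class EchoProvider:
    """Fake provider for local testing: yields the prompt word by word."""

    async def stream(self, prompt: str) -> AsyncIterator[str]:
        for word in prompt.split():
            await asyncio.sleep(0)  # yield control, as a network read would
            yield word + " "


async def collect(provider: LLMProvider, prompt: str) -> str:
    """Consume a stream token by token; a gateway would forward each one."""
    parts = []
    async for token in provider.stream(prompt):
        parts.append(token)
    return "".join(parts)
```

Swapping vendors then means writing another class with the same `stream` method; callers such as `collect` do not change.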

I also made the LLM pipeline explicitly measurable, so end-to-end p95/p99 latency and burst drain metrics can be observed consistently under bounded concurrency and fixed test conditions.
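
To make the measurement idea concrete, here is a minimal Python sketch of a burst test with bounded concurrency. It is an assumption about how such a harness could look, not the one used for Talkie: `percentile` uses the nearest-rank method, `run_burst` and its result keys are hypothetical, and "drain" is taken to mean the wall-clock time until every request in the burst has finished.

```python
import asyncio
import time
from typing import Awaitable, Callable


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not samples or not 1 <= p <= 100:
        raise ValueError("need samples and 1 <= p <= 100")
    ordered = sorted(samples)
    rank = (p * len(ordered) + 99) // 100  # integer ceiling of p% of n
    return ordered[rank - 1]


async def run_burst(handler: Callable[[int], Awaitable[None]],
                    n_requests: int, max_concurrency: int) -> dict:
    """Fire n_requests at once, allowing max_concurrency in flight."""
    sem = asyncio.Semaphore(max_concurrency)
    latencies: list[float] = []

    async def one(i: int) -> None:
        start = time.perf_counter()  # clock starts before queueing
        async with sem:
            await handler(i)
        # Latency therefore includes time spent waiting for a free slot.
        latencies.append(time.perf_counter() - start)

    burst_start = time.perf_counter()
    await asyncio.gather(*(one(i) for i in range(n_requests)))
    return {
        "count": len(latencies),
        "p95": percentile(latencies, 95),
        "p99": percentile(latencies, 99),
        "drain_s": time.perf_counter() - burst_start,
    }
```

Keeping the concurrency limit and request count fixed between runs is what makes the p95/p99 numbers comparable from one run to the next.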

Real‑time agent observability & replay

Talkie-Lab

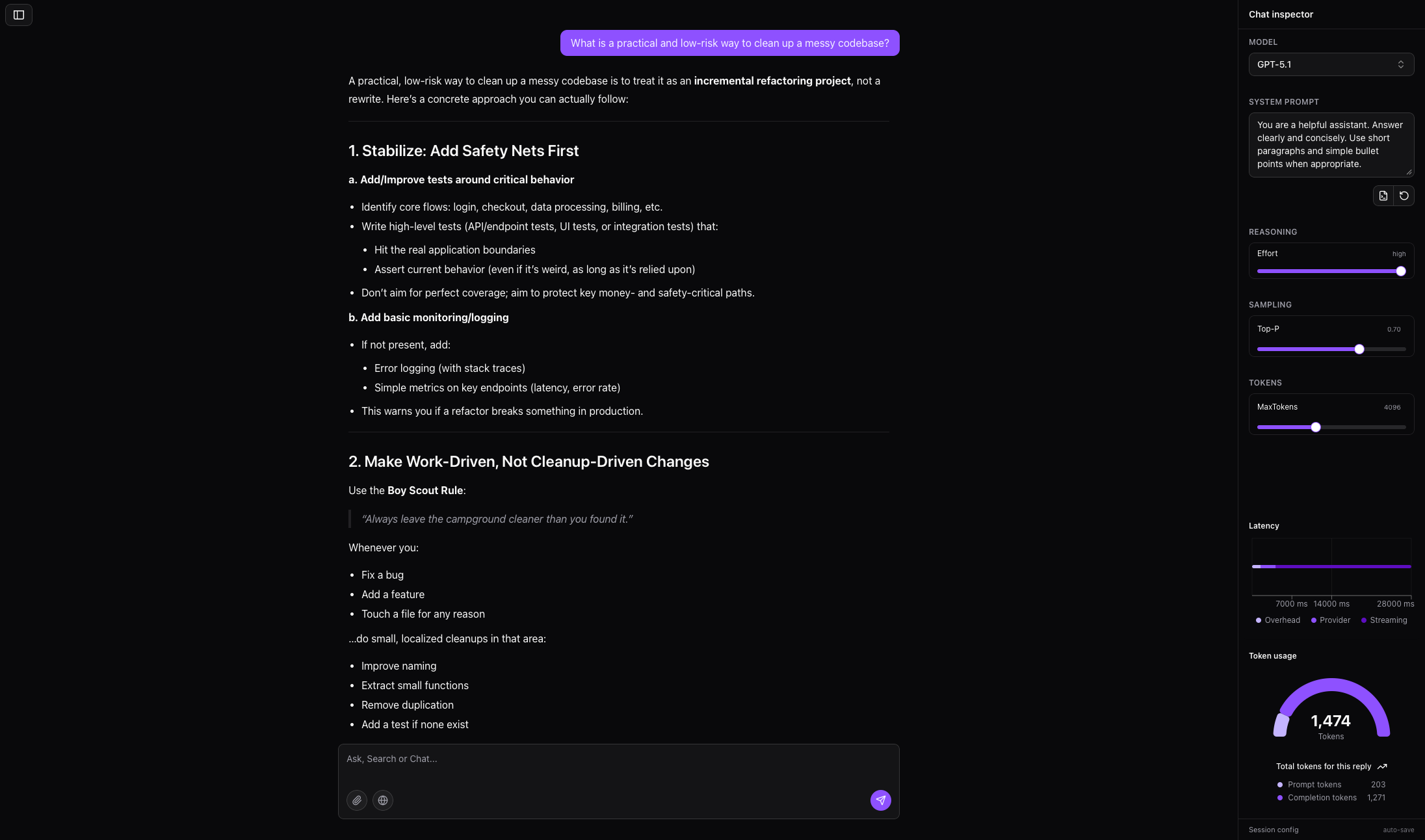

Agent-first LLM lab for debugging, replay, and real-time observability.

- Real-time streaming agent chat

- Session-aware agent state tracking

- Prompt experiments with version history

Why I built this: Most LLM demos stop at “it works.” I wanted to understand why it fails.

Design focus: Agents must be observable, replayable, and debuggable in real time — not guessed from logs.

What makes this hard: Streaming tokens, agent state, and event logs all have to stay perfectly in sync across async boundaries to make replay and debugging trustworthy.
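
One way to keep tokens, agent state, and logs aligned is to record all of them in a single append-only log with a per-session sequence number, then rebuild state by replaying that log in order. The Python sketch below illustrates the idea only; `Event`, `SessionLog`, and `replay` are hypothetical names, not Talkie-Lab's actual data model.

```python
from dataclasses import dataclass, field
from typing import Any, Iterable, Optional


@dataclass(frozen=True)
class Event:
    seq: int      # position in the session, assigned at append time
    kind: str     # "token" or "state" in this sketch
    payload: Any


@dataclass
class SessionLog:
    events: list[Event] = field(default_factory=list)

    def append(self, kind: str, payload: Any) -> Event:
        """Record an event with the next sequence number."""
        event = Event(seq=len(self.events), kind=kind, payload=payload)
        self.events.append(event)
        return event


def replay(events: Iterable[Event],
           upto: Optional[int] = None) -> tuple[str, dict]:
    """Rebuild streamed text and agent state up to and including seq `upto`."""
    text: list[str] = []
    state: dict = {}
    # Sort by seq so delivery order across async boundaries does not matter.
    for event in sorted(events, key=lambda e: e.seq):
        if upto is not None and event.seq > upto:
            break
        if event.kind == "token":
            text.append(event.payload)
        elif event.kind == "state":
            state.update(event.payload)
    return "".join(text), state
```

Because the sequence number is the only source of ordering, replaying the same events always produces the same text and state, even if they arrive out of order.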

I'm a full‑stack developer who builds and operates end‑to‑end systems, covering backend architecture, frontend interfaces, and deployment workflows.

My work centers on designing systems that remain reliable under real‑world conditions, with an emphasis on clarity, maintainability, and operational stability.

My current focus is building systems that behave reliably under production‑like traffic and constraints, and that hold up through iterative refinement.

I focus on building systems that can be operated in real-world conditions, not just demonstrated as prototypes. My work style emphasizes reliability, clarity, and long-term maintainability.

How I Work

- Start with a working prototype to validate assumptions quickly

- Stabilize systems through real usage and iterative refinement

- Design for debuggability and operational visibility

- Document decisions to reduce long-term cognitive load

What I Usually Do

- System design and architecture

- Full-stack development

- Infrastructure and deployment

- Tooling, debugging, and performance optimization

Focus & Interests

- Event‑driven and streaming architectures (Kafka, Redis, background workers)

- RAG and LLM‑powered systems that can be observed, debugged, and iterated safely

- Operational tooling: dashboards, metrics, and internal utilities that make systems easier to run

- Designing APIs and interfaces that stay maintainable as systems grow